Overview

Unity Catalog offers centralized access control, auditing, lineage, and data discovery features spanning Databricks workspaces. This blog articulates our approach to configuring Unity Catalog to govern both the data lake and SQL Server in a centralized manner across multiple environments. Establishing distinct governing policies and data security policies for each environment is crucial for effective management and securing your production data.

To incorporate SQL Server into Unity Catalog, we leveraged the Databricks Datalake federation feature. This involves configuring the necessary settings within Databricks to establish a federated connection with SQL Server. By doing so, Unity Catalog can centrally manage and govern data from SQL Server alongside other federated data sources. This integration ensures a unified approach to access control, auditing, lineage, and data discovery across the entire Databricks environment, including the SQL Server component.

The initial sections walk you through the process of setting up a Unity Catalog and creating data catalogs, while the final section delves into the challenges encountered and the corresponding solutions.

Requirements for Configuring Unity Catalog

- Require to be on the Premium plan of Databricks

- You must have permission to create data lake instance

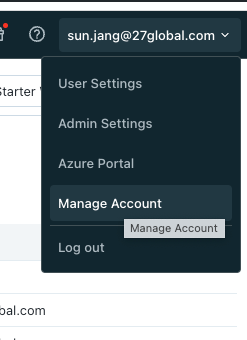

- You must have a Databricks Account Administrator in your cloud environment. This is different from Contributor/admin access to databricks instances. Confirm the access by logging into https://accounts.<yourcloud>databricks.net. Example below is from the Azure cloud environment.

- Create a new account admin in Accounts Console.

How to enable Unity Catalog

- You have to have Admin Account access to databricks

- Go to Manage Account option in databricks. This will take you to the Account Console page (https://accounts.azuredatabricks.net/)

- Create a metastore for unity catalog, One metastore per region

a. Create a metastore

b. Enable Delta Sharing to allow a Databricks user to share data outside their organization

c. Create an Azure Blob storage (gen2) or S3 bucket

d. Create an Access Connector for Databricks: Enable Managed Identity

e. Grant the storage contributor access to the Managed Identity - Assign a workspace to the metastore

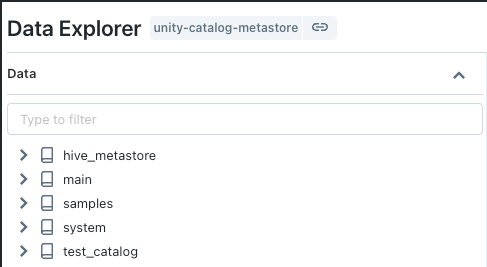

How to Create Data Catalogs

- Go to databricks workspace

- Cluster access mode must be Single user or Multiple users to use Unity Catalog

- Create a catalog with external location to your delta lake

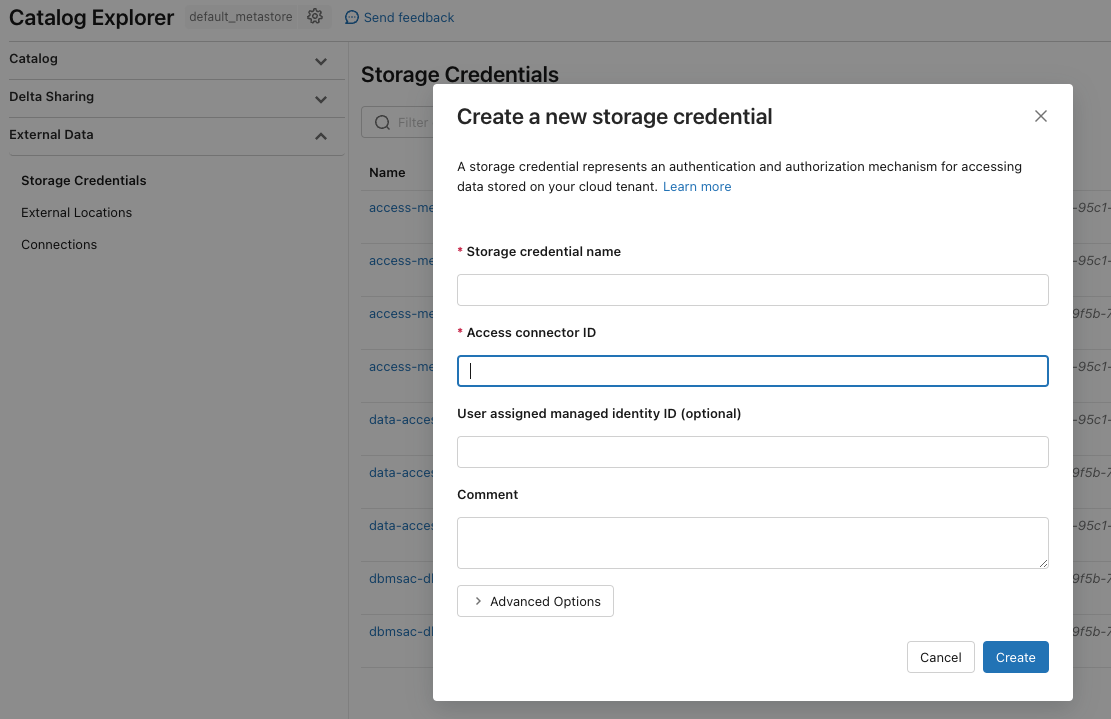

a. Add Storage Credential: use this credential to connect to external locations

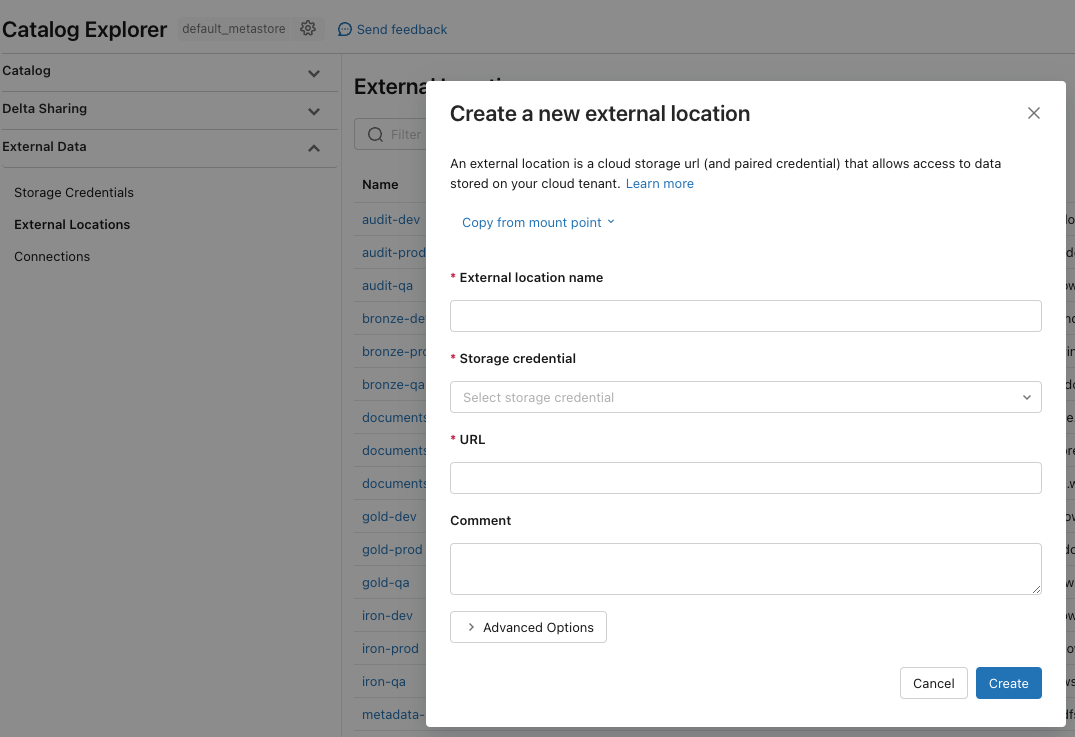

b. Add External Location: link to your data lake storage using the storage credential

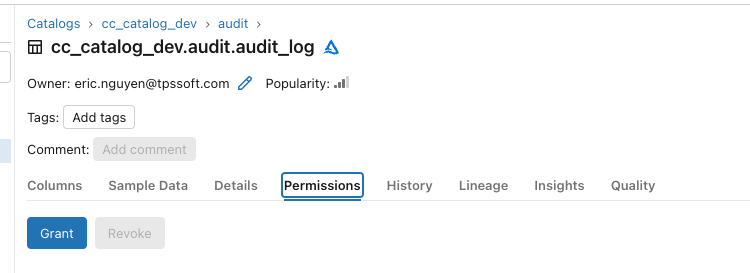

- Grant permission to the catalog and tables/volumes if needed

- Create a schema

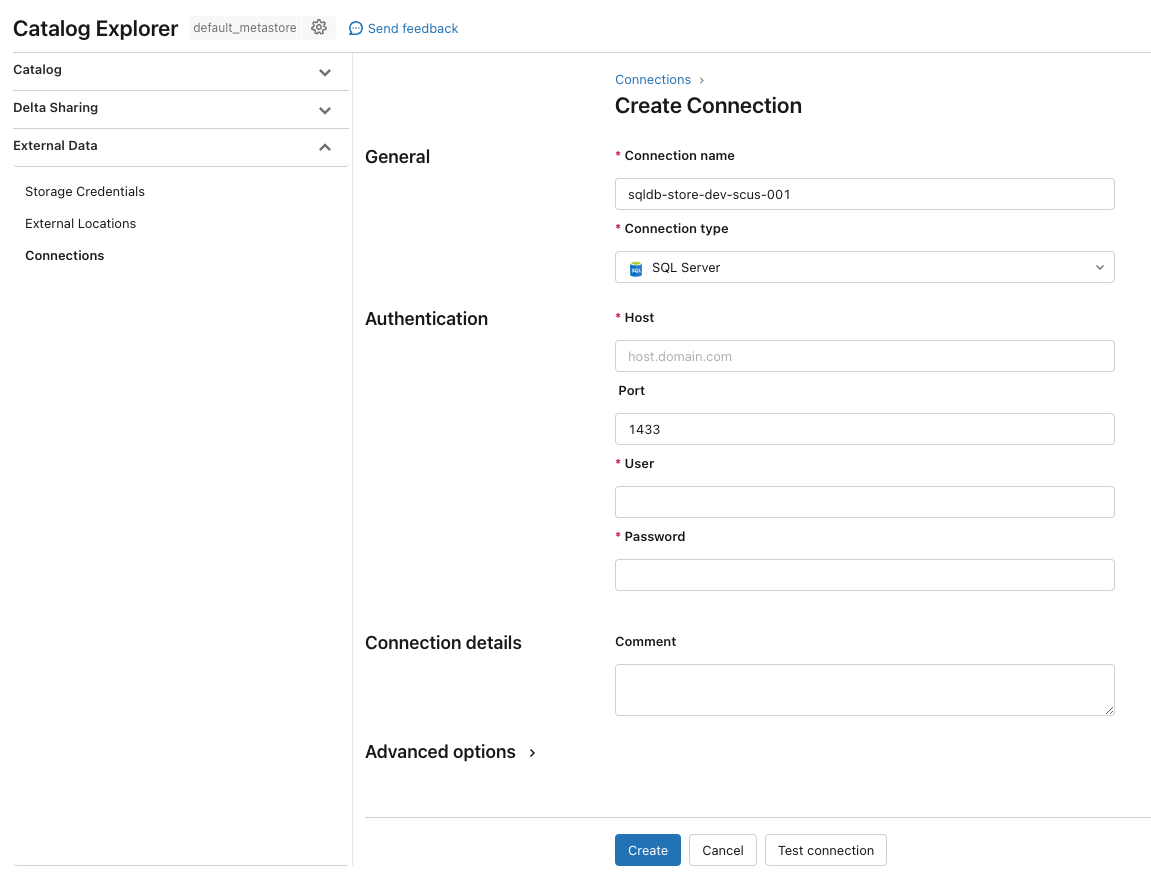

How to Setup SQL Server (Databricks Datalake Federation)

- Go to External Data -> Connections and create a connection to your external SQL database. Example below is to add SQL Server connection.

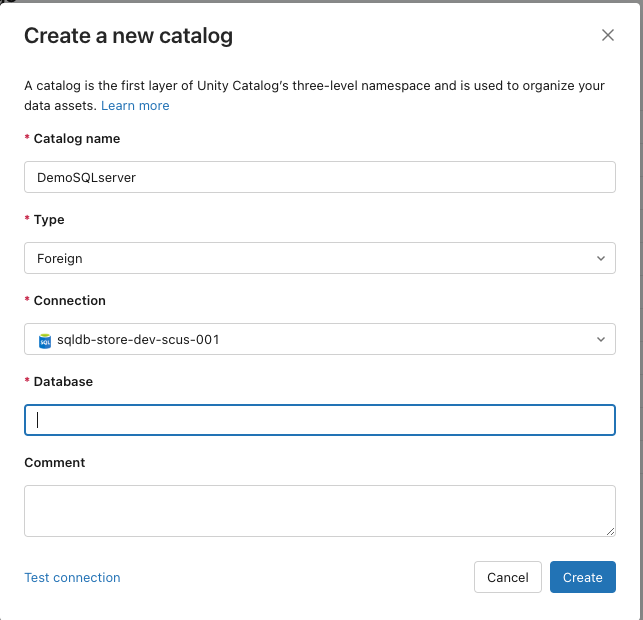

- Create a FOREIGN Catalog:

a. Go to Catalogs and Create Catalog

b. Enter catalog name and select type “Foreign”

c. Select the db connection created from step 1

- Your SQL Catalog will display in the catalog explorer and the notebooks should be able to access this catalog without configuring the connection from each notebook. Access is controlled by the unity catalog.

Governing your Data with User Groups

After establishing the catalogs, permissions can be configured at either the catalog or table level within both data lake catalogs and external catalogs (such as SQL Server). Best practices involve assigning user groups to each table with the relevant permission levels. Unity Catalog facilitates the synchronization of user groups configured in Microsoft Entra ID (formerly Azure Active Directory) when SCIM (System for Cross-domain Identity Management) is provisioned (reference: Configure SCIM provisioning)

Challenges

Our challenge with Unity Catalog revolved around its restriction to a single metastore (unity catalog metadata) per region, hindering the configuration of separate unity catalogs for each environment like development, QA, and production. Establishing distinct governing policies and data security policies for each environment is crucial for effective management and securing the production data.

To resolve this issue, we implemented a solution by establishing distinct catalogs for each environment (catalog_dev, catalog_qa, catalog_prod) within a consolidated unity catalog and applied different data governance policies. Furthermore, we organized the data in the corresponding subscription data lake for each environment.

The diagram below outlines the comprehensive architecture of Databricks multi-environments. This was achieved through the utilization of Terraform code to configure the unity catalog, workspaces, and data catalogs.

Summary

We successfully implemented governance for both the data lake and SQL Server using Unity Catalog. Our DevOps team automated these configurations through Terraform, allowing for a standardized deployment across multiple environments without the need for manual intervention. It is strongly recommended to keep production data separate from test data. Unity Catalog should not be viewed as a limitation in achieving this separation.

While Unity Catalog offers internal user management in Databricks, it is advisable to avoid redundant user creation or management. Configuring SCIM is highly recommended, as it enables synchronization of user management between Unity Catalog and your cloud environment, streamlining the process and maintaining consistency.